This was a project I worked on extensively at the start of my PhD and built out infrastructure for over the course of about 3 years. Unfortunately, the project never got fully funded so I didn’t get to see it through to fruition. But I developed some cool software for it and want to show it off!

Teleoperation in Virtual Environments

Teleoperation is a common robot control paradigm where a human user commands a robot using an input device. The input device could be as simple as a keyboard, or as advanced as a specially designed control mechanism or even another robot moved about in gravity compensation mode. This is in contrast to a robot operating autonomously, where it decides its own actions and executes motions without human direction. Teleoperation is commonly used to record demonstration data from which autonomous policies can be learned. It is also common for simply having a robot controlled by a human, e.g. operating a rescue robot at a disaster site.

I was exploring a project where policy learning could be done in a virtual simulation environment. The basic idea was a human user could teleoperate a virtual robot in a virtual environment, and learn a policy that could then be deployed to a real robot in a real environment. The kicker is we wanted to incorporate force feedback in the virtual environment, so that we could learn force-aware policies to deploy to the real robot, in hopes of learning more robust policies that could account for interaction forces in the environment.

To achieve this, we wanted a way to physically render force feedback to the user as they engage with the virtual environment. We decided to use a Phantom Omni haptic input device. This enabled the user to move a stylus around in 3D and feel force resistance from the device. The challenge for me was to figure out how to compute forces in the virtual world, and how to render them to the user within a framework for policy learning and deployment. I’ll go into some of the more interesting details below.

Software Development

| Repository | Description |
|---|---|
| ll4ma_teleop | Package for teleoperating virtual and real robots with haptic input devices, including sending commands, rendering virtual environments, and an rviz plugin. |
| phantom_omni | A fork of fsuarez6/phantom_omni where I had to make several URDF modifications and some driver modifications to get our device working in the lab. |
| dart_ros | A package I developed to use a DART simulation physics backend to compute physics for a simulator interface in rviz. |

Virtual Environment

I wanted a relatively lightweight simulation environment where I could have some control over force rendering, as we were planning on experimenting with different approaches for that. I opted to use rviz as a visual renderer of the scene, primarily because I was already experienced with it and it had all of the basic visualizations and plugin interfaces we needed. I chose to use DART as the backend physics engine, as it had proven to be the superior physics engine in Gazebo for contact dynamics in manipulation tasks. (Note, this was before the proliferation of better physics simulators for robotics like NVIDIA Isaac and friends.)

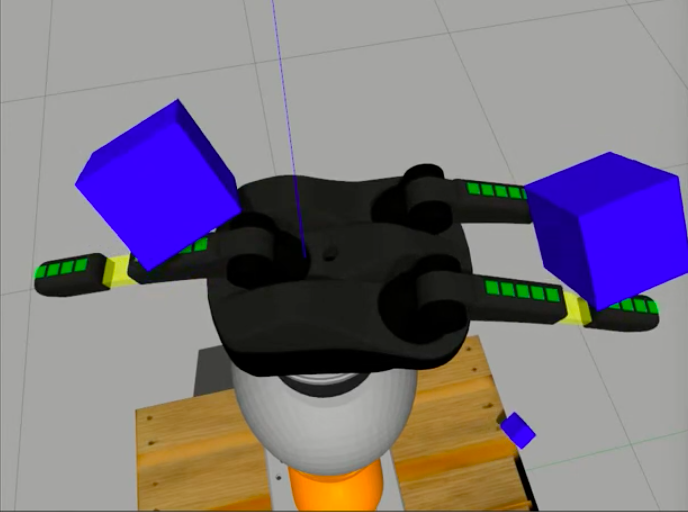

I set things up so that the robot and objects in the scene were represented in the backend DART physics simulation and also rendered in rviz. To do that, I read out the current state from the simulator and set object poses accordingly, so that the objects could be rendered with a MarkerArray in rviz. I relied heavily on ROS (as usual) to keep things in sync by publishing state data over ROS topics, and I exposed several ROS services for performing various utility actions like resetting the simulation environment back to its nominal state.

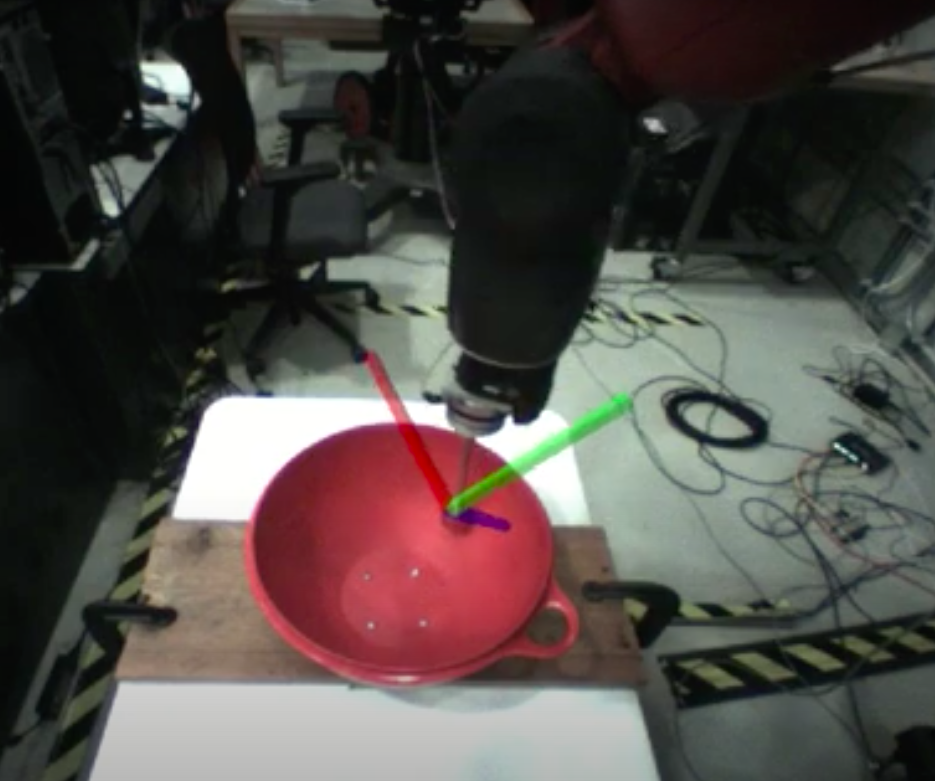

In order to render forces (more on that below), I used the DART simulation state to compute net forces acting on the robot’s end-effector. DART offered CollisionResult objects from which you could find all of the collision bodies colliding with the body of interest, and sum up the net forces acting on it. This could be done efficiently enough that I could run it in a real-time loop and render it to the haptic device. For the simple interactions I was performing in the simulator, it provided a very intuitive force rendering for the haptic device.
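The summation itself is simple, and a minimal sketch of the idea looks something like the following. This is not the actual dart_ros code or DART's API: the `Contact` record, its field names, and the sign convention (the stored force acts on `body_a`, with `body_b` feeling the opposite) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Contact:
    """Hypothetical stand-in for one contact reported by a physics engine."""
    body_a: str  # name of the first body in the contact pair
    body_b: str  # name of the second body in the contact pair
    force: Vec3  # force on body_a in the world frame (N); assumed convention


def net_contact_force(contacts: Iterable[Contact], body: str) -> Vec3:
    """Sum all contact forces acting on `body`, expressed in the world frame."""
    fx = fy = fz = 0.0
    for c in contacts:
        if c.body_a == body:
            sign = 1.0
        elif c.body_b == body:
            sign = -1.0  # equal and opposite force on the second body
        else:
            continue  # this contact does not involve the body of interest
        fx += sign * c.force[0]
        fy += sign * c.force[1]
        fz += sign * c.force[2]
    return (fx, fy, fz)


# Example: the end-effector touches the table and is pushed by a book.
contacts = [
    Contact("end_effector", "table", (0.0, 0.0, 2.0)),
    Contact("book", "end_effector", (1.0, 0.0, 0.0)),
    Contact("book", "table", (0.0, 0.0, 5.0)),  # ignored: no end-effector
]
net = net_contact_force(contacts, "end_effector")
```

Because this is a single pass over the contact list, it is cheap enough to call once per control cycle.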

You can see an example of the computed force vectors during contact here:

Force Rendering to a Haptic Input Device

We used the Phantom Omni haptic input device to control the robot’s end-effector. I set up a task space controller for the robot such that you could move the input device’s stylus around in 3D space and the robot’s end-effector would move in a similar fashion. Since I was able to compute the forces acting on the robot’s end-effector (e.g. when it would touch the table or an object in the scene), I could send those as force commands to the haptic device (doing a little transform math to ensure they’re rendered correctly). The haptic device driver would then take those commands and figure out the appropriate way to drive the device’s motors so that the forces were rendered to the user correctly. The end result is a little magical. Even though I coded the whole pipeline and knew exactly how it worked, I was consistently amazed by the experience of feeling forces in the real world while commanding a robot in a virtual world.
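To give a flavor of the "little transform math", here is a rough sketch of one way to map a world-frame force into a command for the device. It is not the code from ll4ma_teleop: the function name, the rotation matrix argument, the scale factor, and the force limit are all hypothetical values chosen for illustration. The real device has its own force limits that the command should respect.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def to_device_force(
    force_world: Sequence[float],
    R_device_world: Sequence[Sequence[float]],
    scale: float = 0.1,      # assumed gain from simulated to rendered force
    max_force: float = 3.0,  # assumed safety limit in newtons
) -> Vec3:
    """Rotate a world-frame force into the device frame, scale it, and clamp it."""
    # Rotate: f_device = R_device_world * f_world, then apply the gain.
    f = [
        scale * sum(R_device_world[i][j] * force_world[j] for j in range(3))
        for i in range(3)
    ]
    # Clamp the magnitude while preserving direction.
    mag = math.sqrt(sum(x * x for x in f))
    if mag > max_force:
        f = [x * max_force / mag for x in f]
    return (f[0], f[1], f[2])


# Example: the device frame is rotated 90 degrees about Z relative to the world.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
cmd = to_device_force((10.0, 0.0, 0.0), R)
```

Clamping the magnitude rather than each axis separately keeps the rendered force pointing in the same direction as the simulated one, which matters for the interaction to feel right.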

The final version with the rviz plugin is shown at the top of this page. I’m including a few prototype versions below which were just as fun. I experimented with different user interfaces since I found it difficult to perform some tasks when I had to worry about the full 3D motion of the robot’s end-effector. An effective strategy was to allow the user to select specific dimensions to control the robot’s motion while other dimensions were not controlled. For example, while sliding on a table it would be useful to only control motion in the XY plane of the table while disabling control for the Z dimension perpendicular to the table surface so that the robot keeps contact with the table and the user can focus on the motion in the plane.

Here’s an example where I do just that, directly controlling a robot in the Gazebo simulator using the Phantom Omni, disabling motion control in the Z direction (perpendicular to the table surface) once the robot is in contact and applying force, so that it can easily move along the XY plane of the table and maintain the applied force on the table.

Here is another example of controlling the robot in Gazebo, this time mimicking the task of sliding a book along the table. Again, the forces here are being rendered to the user controlling the robot with the Phantom Omni device.

This was a fun project to work on, and building out the infrastructure to interface with both a simulator and real hardware was a big undertaking in software design and development. I learned a lot about the challenges of human-machine interfaces, particularly when it comes to working with force data, which is inherently noisy and challenging to render in a way that matches a user’s expectations.