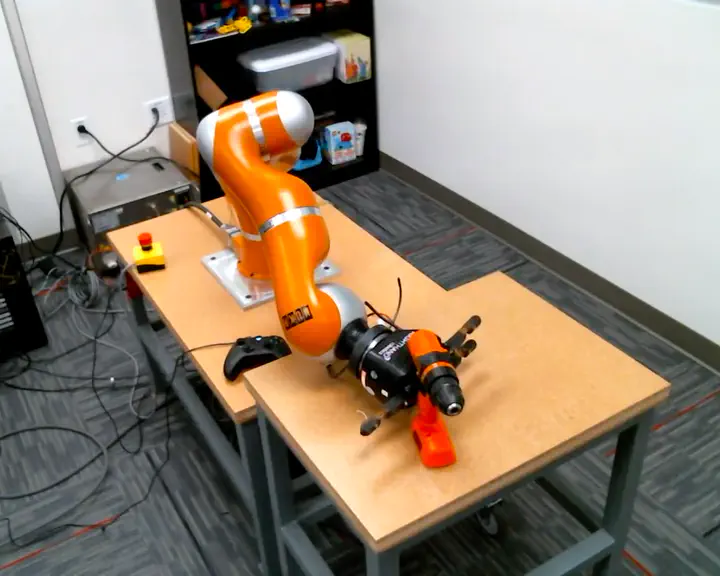

Real-Time Robot Control

We control our KUKA LBR4+ arm with the Orocos Real-Time Toolkit, which ensures that our controllers meet the real-time requirements of the LBR4+. The base implementation our lab started with comes from the lwr_hardware package from the Institut des Systèmes Intelligents et de Robotique (ISIR).

I have extended this framework with a controller manager that allows controllers to be swapped safely at runtime. I use it to rapidly record kinesthetic demonstrations on the robot by switching between a gravity compensation controller (for moving the arm kinesthetically), a high-stiffness joint controller (for keeping the robot stationary), a joint PD controller (for moving the robot to a nominal starting position), and a task-space inverse dynamics controller (for executing task-space policies).
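To make the switching idea concrete, here is a minimal Python sketch of a controller manager. It is not the Orocos implementation described above: the class names (`Controller`, `GravityCompensation`, `JointPD`, `ControllerManager`), the gains, and the assumption that gravity is compensated outside these controllers are all hypothetical and chosen for illustration. The one design idea it tries to show is that a switch request is only applied at the start of a control cycle, and that the incoming controller latches the currently measured joint positions so a hold controller starts with zero error.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class Controller:
    """Hypothetical interface: start() latches state, update() returns joint torques."""

    def start(self, q: List[float]) -> None:
        pass

    def update(self, q: List[float], qd: List[float]) -> List[float]:
        raise NotImplementedError


class GravityCompensation(Controller):
    # Assumption: gravity is compensated elsewhere, so commanding zero
    # additional torque leaves the arm free to be moved by hand.
    def update(self, q, qd):
        return [0.0] * len(q)


@dataclass
class JointPD(Controller):
    kp: float
    kd: float
    q_des: Optional[List[float]] = None  # None: hold the pose latched at start()
    _target: List[float] = field(init=False, default_factory=list)

    def start(self, q):
        # Latch the measured pose unless an explicit goal was given.
        self._target = list(q) if self.q_des is None else list(self.q_des)

    def update(self, q, qd):
        # Joint-space PD law: tau = kp * (q_target - q) - kd * qd
        return [self.kp * (t - qi) - self.kd * qdi
                for t, qi, qdi in zip(self._target, q, qd)]


class ControllerManager:
    def __init__(self, controllers: Dict[str, Controller], initial: str):
        self._controllers = controllers
        self._active = initial
        self._pending: Optional[str] = None
        self._started = False

    def request_switch(self, name: str) -> bool:
        """Queue a switch; unknown controller names are rejected."""
        if name not in self._controllers:
            return False
        self._pending = name
        return True

    def step(self, q: List[float], qd: List[float]) -> List[float]:
        # Apply a queued switch only at the cycle boundary, never mid-update.
        if self._pending is not None:
            self._active = self._pending
            self._pending = None
            self._started = False
        controller = self._controllers[self._active]
        if not self._started:
            controller.start(q)
            self._started = True
        return controller.update(q, qd)


manager = ControllerManager(
    {
        "gravity_comp": GravityCompensation(),
        "hold": JointPD(kp=500.0, kd=20.0),                  # stiff hold at latched pose
        "home": JointPD(kp=50.0, kd=5.0, q_des=[0.0] * 7),   # move to a nominal pose
    },
    initial="gravity_comp",
)
q, qd = [0.1] * 7, [0.0] * 7
tau_float = manager.step(q, qd)   # arm is free to move
manager.request_switch("hold")    # e.g. triggered by a gamepad button
tau_hold = manager.step(q, qd)    # hold controller starts with zero error
```

In a real system the stiff "hold" and softer "home" behaviours would likely need velocity limits, torque saturation, and trajectory interpolation on top of this; the sketch leaves those out to keep the switching logic visible.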

This video shows the various transitions between controllers in a kinesthetic learning-from-demonstration setting, where controller switching is initiated by button presses on an Xbox One controller:

Here is a video of fully autonomous execution of a learned Probabilistic Movement Primitive policy using the joint PD controller:

The LBR4+ comes with a Fast Research Interface (FRI), which allows us to run our custom controllers and read the robot state through ROS. I didn’t like having to debug my controllers and controller manager on the real robot, so I created a simulated FRI that lets me run our Orocos controllers in Gazebo before going live. The simulated FRI hooks directly into the Gazebo simulation to read the robot state, and it accepts the torque commands output by our controllers and passes them to the ROS control hardware interfaces.
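The pattern behind the simulated FRI can be sketched in a few lines of Python: the controller loop talks only to a small "read state, write torques" interface, so the same loop can run against a simulator or the real arm. Everything named here (`RobotInterface`, `SimulatedRobot`, `pd_torques`, `run`) is hypothetical, and the toy simulator uses independent unit-inertia joints with no gravity rather than Gazebo or the actual FRI protocol.

```python
from typing import List, Protocol, Tuple


class RobotInterface(Protocol):
    """Hypothetical minimal interface shared by a real and a simulated backend."""

    def read_state(self) -> Tuple[List[float], List[float]]: ...

    def write_torques(self, tau: List[float]) -> None: ...


class SimulatedRobot:
    """Toy simulator backend: each joint is an independent inertia, no gravity."""

    def __init__(self, q0: List[float], dt: float = 0.001, inertia: float = 1.0):
        self.q = list(q0)
        self.qd = [0.0] * len(q0)
        self.dt = dt
        self.inertia = inertia

    def read_state(self) -> Tuple[List[float], List[float]]:
        return list(self.q), list(self.qd)

    def write_torques(self, tau: List[float]) -> None:
        # Advance one control period with semi-implicit Euler integration.
        for i, torque in enumerate(tau):
            self.qd[i] += (torque / self.inertia) * self.dt
            self.q[i] += self.qd[i] * self.dt


def pd_torques(q, qd, q_des, kp, kd):
    # Joint-space PD law: tau = kp * (q_des - q) - kd * qd
    return [kp * (d - qi) - kd * qdi for d, qi, qdi in zip(q_des, q, qd)]


def run(robot: RobotInterface, q_des: List[float], steps: int,
        kp: float = 100.0, kd: float = 20.0) -> List[float]:
    """Controller loop that is unaware of which backend it is driving."""
    for _ in range(steps):
        q, qd = robot.read_state()
        robot.write_torques(pd_torques(q, qd, q_des, kp, kd))
    return robot.read_state()[0]


sim = SimulatedRobot(q0=[0.0] * 7)
final_q = run(sim, q_des=[0.5] * 7, steps=3000)  # 3 simulated seconds at 1 kHz
```

Because `run` depends only on the two interface methods, a controller bug such as a sign error in the PD law shows up as a diverging simulated joint rather than as unexpected motion on hardware.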